Density and cost savings with Kubernetes

It is no secret I’m in a love relationship with Docker. I have blogged more than once about how “Docker is the most useful tool for all a computer can do” when showcasing some frontend work, and about how “this is what I love with Docker: never worrying about uninstalling” while documenting devops/infrastructure to host backend code. It is incredibly convenient to package both your application and all of its system requirements in a simple Dockerfile, so that it runs anywhere exactly as it does on your local machine. Pushing further, a few years ago I put together a proof of concept running DC/OS at scale to host an ecosystem of microservices, and more recently I’ve been deploying a few heavily loaded applications on Amazon ECS with great success. It was just a matter of time before I jumped on Kubernetes, and I haven’t looked back since…

Sharing some love for Kubernetes

The first thing that struck me is how easy and clean it is to run Kubernetes on a laptop. If you are working on a MacBook and already have Docker installed, it’s as easy as downloading minikube, the hyperkit driver (hyperkit ships with Docker, no need for VirtualBox!) and kubectl:

    # minikube executable
    curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.28.0/minikube-darwin-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/

    # hyperkit driver
    curl -LO https://storage.googleapis.com/minikube/releases/latest/docker-machine-driver-hyperkit && sudo install -m 4755 docker-machine-driver-hyperkit /usr/local/bin/

    # kubectl executable
    curl -Lo kubectl https://storage.googleapis.com/kubernetes-release/release/v1.10.0/bin/darwin/amd64/kubectl && chmod +x kubectl && sudo mv kubectl /usr/local/bin/

Notice how those are just 3 files, not polluting your laptop. Cleaning up is as easy as removing them (plus the ~/.minikube and ~/.kube directories that minikube and kubectl create once they run). Yet you can already start minikube:

    minikube start --vm-driver hyperkit

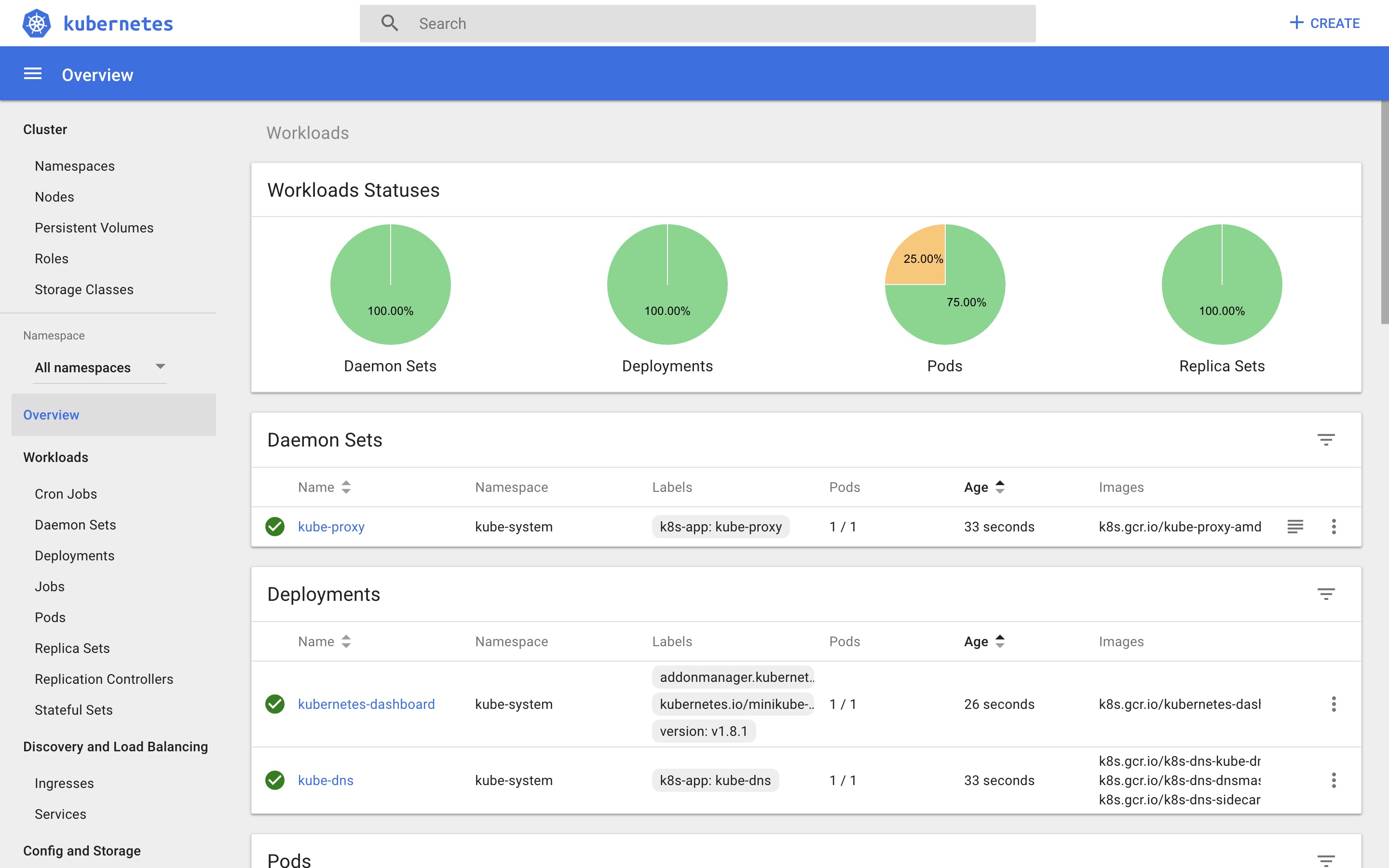

Now, you’ve got a locally hosted Kubernetes cluster at your fingertips! No kidding, a single instance, yet fully functional k8s cluster is waiting for you to play with. Just run minikube dashboard and:

From there, you can play with kubectl and start deploying your application from your local Docker images, along with any dependencies, right in your local Kubernetes cluster. Have a look at Helm, the amazing Kubernetes package manager, and the official stable Helm charts: deploying a CMS like Drupal, a database like Redis or a queuing system like RabbitMQ has never been easier, and the sky is the limit! Being that user friendly is a big win: no back and forth between devops and developers, and anyone can thoroughly test automation, complex application integrations and deployments from the comfort of a laptop.
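As a rough illustration of "playing with kubectl", here is a minimal smoke test for a freshly started minikube cluster. It is only a sketch: the deployment name `hello` and the public `nginx` image are placeholders chosen for the example, not something from my own setup. The commands are wrapped in functions so nothing runs until you call them.

```shell
# Hypothetical smoke test for a fresh minikube cluster.
# "hello" and the nginx image are placeholders for this example.
smoke_test() {
  kubectl get nodes                                          # the single minikube node should be Ready
  kubectl create deployment hello --image=nginx              # run one nginx Pod
  kubectl expose deployment hello --type=NodePort --port=80  # make it reachable from outside the cluster
  kubectl get pods -l app=hello                              # list the Pod created by the deployment
  minikube service hello --url                               # print a URL reachable from the laptop
}

# Remove everything the smoke test created.
cleanup_smoke_test() {
  kubectl delete deployment,service hello
}

# Run manually once `minikube start` has finished:
#   smoke_test && cleanup_smoke_test
```

To use your own local images instead of nginx, `eval $(minikube docker-env)` points your Docker client at the Docker daemon inside minikube, so images you build there are visible to the cluster.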

Stopping minikube is as easy as minikube stop. Have a look at the minikube README to learn more about what you can do with it.

Happy with what you’ve seen so far? Minikube is only one of many local-machine solutions, and there are dozens of hosted Kubernetes solutions, including services from the big 3: Amazon EKS, Google Kubernetes Engine and Azure Kubernetes Service (AKS). Add a pinch of automation, and your application is ready for some heavy production workloads!

Granularity leads to density

Here is the real deal when it comes to Kubernetes. Not only is it easy to play with locally, and reasonably easy to bring your k8s application to production grade, backed by a major cloud provider: you also get full control over the compute resources you assign to your Pods and the containers they encapsulate.

See for yourself in this extract from a Pod definition:

    resources:
      requests:
        memory: "128Mi"
        cpu: "200m"
      limits:
        memory: "128Mi"
        cpu: "200m"

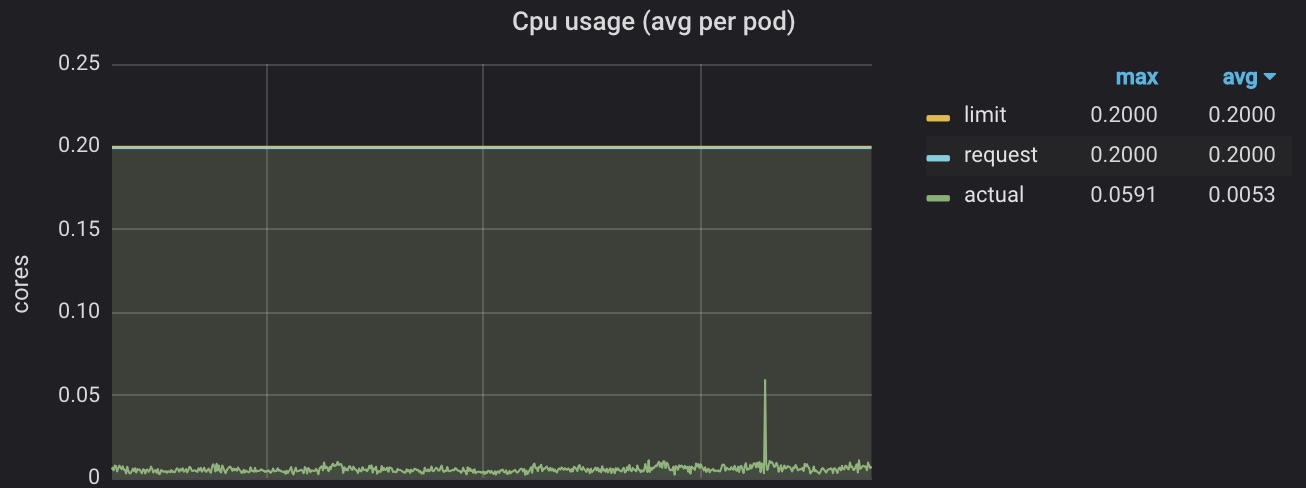

In this example we are assigning, as in requesting and limiting, 128MiB of memory and 200 milli (0.2, as in 1/5, yes just one fifth) of a Virtual CPU for some container to run with. Now that might sound awkward to someone not used to managing servers, be they virtual or not, but overall the average CPU and memory usage is never really pushed to the limit without unexpected consequences and unreliable latencies for your application. Having a dozen Kubernetes hosted containers, each running an API receiving requests per minute in the thousands, here is what the CPU usage looks like:

Sorry about the spike: the maximum displayed is 5.91% of a CPU core! The average Node.js container running an API (Koa, Express…) will use roughly 100 MB of memory and as little CPU as you can see above, depending on what the code is doing and, obviously, on the traffic received. The same goes for .NET, Python and many others… This is about granularity: fine-grained resource management that lets you get more from your servers by increasing the density of your applications. With just 1 reasonably sized machine you can host more than you ever thought of. Make that 3 smaller machines, deploy your Pods as a Deployment, and you get fault tolerance without lifting a finger: if a machine ever goes down, Kubernetes automatically re-provisions its Pods on the remaining machines (how fast depends on how the cluster is configured to detect node failures).
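To make the "deploy your Pods as a Deployment" part concrete, here is a minimal sketch of such a manifest, written to a file from the shell. The name `api` and the image `example/api:1.0.0` are hypothetical placeholders; the resources block is the one from the extract earlier in this post. Keep in mind that `replicas: 3` only asks for three copies: the scheduler usually spreads them across machines, but guaranteeing one per machine takes extra rules such as pod anti-affinity or topology spread constraints, which are left out here.

```shell
# Write a hypothetical Deployment manifest to api-deployment.yaml.
# The name "api" and the image "example/api:1.0.0" are placeholders.
cat > api-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
spec:
  replicas: 3            # three copies, so losing one machine leaves two serving
  selector:
    matchLabels:
      app: api
  template:
    metadata:
      labels:
        app: api
    spec:
      containers:
        - name: api
          image: example/api:1.0.0
          resources:
            requests:
              memory: "128Mi"
              cpu: "200m"
            limits:
              memory: "128Mi"
              cpu: "200m"
EOF

# Then, against a running cluster:
#   kubectl apply -f api-deployment.yaml
#   kubectl get pods -l app=api -o wide   # the NODE column shows where each Pod landed
```

Because requests and limits are identical for both CPU and memory, Kubernetes places these Pods in the Guaranteed quality-of-service class, which makes them the last candidates for eviction when a node runs short of resources.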

Feel the love growing?

Density equals cost savings

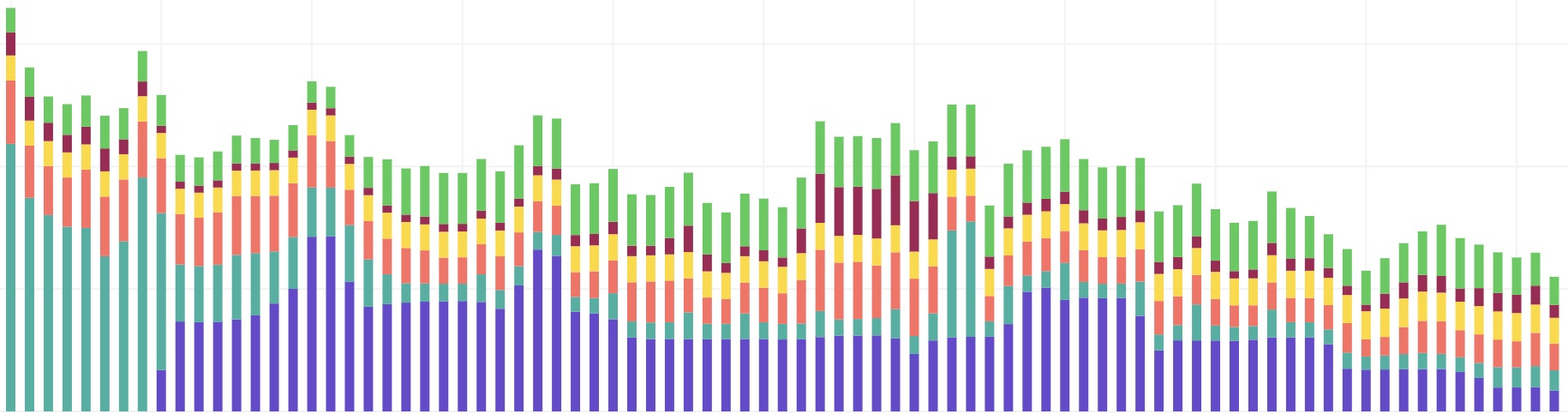

Now, here is the big deal with Kubernetes. Forget about fault tolerance, convenient local development, automation, and all of the Kubernetes goodies like DeamonSet, CronJob resources, or pre-made and production ready Helm charts. With this granular control over compute resources, increase the density of the applications deployed on your servers and that is how your cost reports will look like along the way:
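The arithmetic behind density is simple enough to sketch in a few lines of shell. The node size below (2 vCPU and 4 GiB allocatable) is a made-up example, not one of my machines; the 200m / 128Mi requests and the dozen containers come from earlier in this post. This is a back-of-the-envelope estimate only: real nodes reserve some capacity for the system, cap the number of Pods per node, and you will want headroom for failover.

```shell
# pods_per_node NODE_CPU_MILLI NODE_MEM_MIB REQ_CPU_MILLI REQ_MEM_MIB
# Prints how many containers with the given requests fit on one node.
# A container needs both CPU and memory, so the smaller quotient wins.
pods_per_node() {
  by_cpu=$(( $1 / $3 ))
  by_mem=$(( $2 / $4 ))
  if [ "$by_cpu" -lt "$by_mem" ]; then echo "$by_cpu"; else echo "$by_mem"; fi
}

# nodes_needed CONTAINERS PER_NODE
# Prints the number of nodes required (ceiling division).
nodes_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# Hypothetical node: 2000m CPU and 4096Mi memory allocatable.
per_node=$(pods_per_node 2000 4096 200 128)
echo "containers per node: $per_node"                            # 10 (CPU-bound; memory alone would allow 32)
echo "nodes for 12 containers: $(nodes_needed 12 "$per_node")"   # 2
```

Under these assumptions, a dozen APIs that would each have had their own virtual machine fit on 2 nodes, and that difference is what shows up in the cost report.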

Efficient, developer friendly, budget friendly… No wonder the tech industry is moving to Kubernetes, or at least containerizing!

Is your developer experience rough and impossible to reproduce in production environments? Are your infrastructure costs out of control? Need help getting your teams and applications to Kubernetes?

I would love to get your feedback! Get in touch right now by email.

- Kubernetes, Production-Grade Container Orchestration: https://kubernetes.io/

- Minikube, run Kubernetes locally: https://github.com/kubernetes/minikube

- The kubectl cheat sheet: https://kubernetes.io/docs/reference/kubectl/cheatsheet/

- Helm, the Kubernetes Package Manager: https://github.com/helm/helm

- Curated, stable Helm charts: https://github.com/helm/charts/tree/master/stable